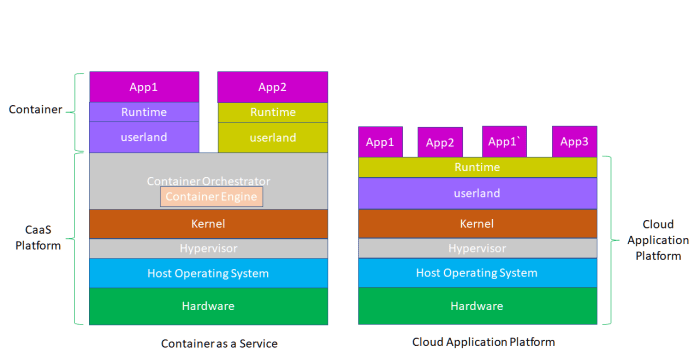

Cloud container servers are totally changing the game for app deployment. Think of containers as lightweight, portable packages that hold everything your app needs (code, libraries, runtime), all neatly bundled. Because containers share the host's kernel instead of each booting a full guest operating system, they start faster and use fewer resources than traditional virtual machines. That means easier scaling, faster deployments, and way less headache. We’ll unpack the nuts and bolts of this tech, from choosing the right orchestration platform to optimizing costs and security.

We’ll explore different cloud providers like AWS, Google Cloud, and Azure, comparing their container services and helping you decide which one best fits your needs. We’ll also cover security best practices – because keeping your apps safe is, like, super important. Plus, we’ll dive into scaling, performance optimization, and cost management strategies to make sure your containerized apps run smoothly and efficiently.

Security Considerations for Cloud Container Servers

Containerization offers amazing scalability and efficiency, but it also introduces a new attack surface. Understanding and mitigating security risks is crucial for a successful and secure cloud container deployment. Failing to address these vulnerabilities can lead to data breaches, service disruptions, and significant financial losses. This section outlines key security considerations and best practices.

Common Container Security Vulnerabilities

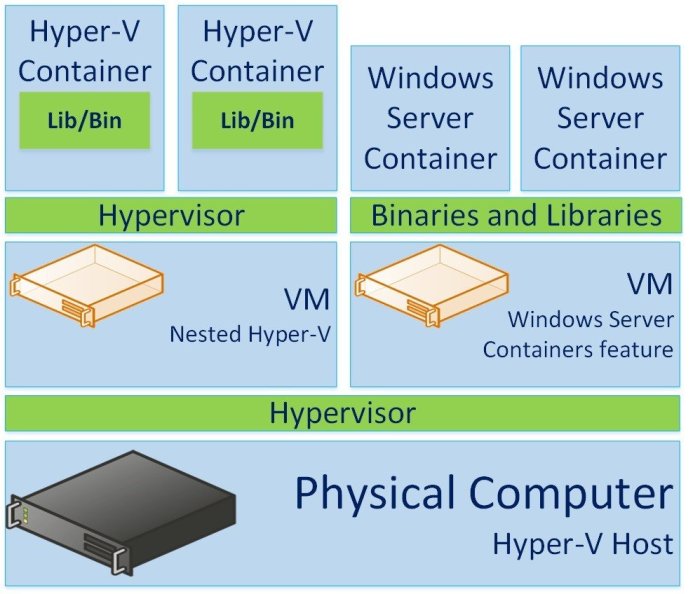

Containers inherit vulnerabilities from their underlying images and the host system. Common vulnerabilities include insecure image configurations (exposed ports, unnecessary services), vulnerabilities in the base operating system image, and insufficient access control within the containerized environment. Compromised container images can spread malware rapidly across a cluster, leading to widespread damage. Furthermore, weak network configurations and lack of proper monitoring can easily lead to unauthorized access and data exfiltration.

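For example, a container that runs as root and exposes a port is a prime target: if an attacker exploits a flaw in the service listening on that port, they gain root inside the container, and any further escape to the host becomes far more damaging.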
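To make the root-plus-exposed-port risk concrete, here is a minimal sketch that audits a container spec, represented as a plain Python dict, for a few risky settings. The keys (`securityContext`, `runAsNonRoot`, `runAsUser`, `privileged`, `allowPrivilegeEscalation`, `ports`, `hostPort`) mirror real Kubernetes container fields, but `audit_container` is a hypothetical helper with deliberately simple rules, not a replacement for a policy engine or admission controller.

```python
from typing import Any


def audit_container(spec: dict[str, Any]) -> list[str]:
    """Return findings for risky settings in one container spec (hypothetical helper)."""
    findings: list[str] = []
    ctx = spec.get("securityContext", {})

    # With neither runAsNonRoot nor a non-zero runAsUser, the image's default
    # user applies, and that is frequently root.
    if not ctx.get("runAsNonRoot") and ctx.get("runAsUser", 0) == 0:
        findings.append("may run as root")
    if ctx.get("privileged"):
        findings.append("privileged mode grants broad host access")
    # An omitted allowPrivilegeEscalation does not block escalation, so treat it as allowed.
    if ctx.get("allowPrivilegeEscalation", True):
        findings.append("privilege escalation not disabled")
    # hostPort publishes the container port directly on the node's network interface.
    for port in spec.get("ports", []):
        if "hostPort" in port:
            findings.append(f"binds host port {port['hostPort']}")
    return findings


# Usage: a container with no securityContext that binds a host port.
risky = {"name": "web", "image": "web:1.0",
         "ports": [{"containerPort": 80, "hostPort": 80}]}
for finding in audit_container(risky):
    print(finding)
```

In practice, checks like these usually run in CI or at admission time; Kubernetes Pod Security Admission covers similar ground.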

Securing Container Images and Registries

Secure container images are paramount. This involves using minimal base images, regularly scanning images for vulnerabilities using tools like Clair or Trivy, and employing multi-stage builds to reduce the attack surface. Images should only include necessary packages and dependencies. Regularly updating base images and patching known vulnerabilities is also critical. Secure registries, such as those offered by cloud providers, should be used to store and manage container images.
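Scanners are most useful when their output can fail a build. The sketch below reads a JSON report shaped like the one `trivy image --format json` produces (a top-level `Results` list whose entries carry `Target` and `Vulnerabilities`, each vulnerability having `VulnerabilityID` and `Severity`) and returns the findings that should block a release. Treat that schema as an assumption to check against your Trivy version; the function name, the blocking policy, and the sample report (with made-up CVE IDs) are illustrative. Trivy can also enforce a similar gate by itself through its `--severity` and `--exit-code` options.

```python
import json

# Severities that should stop a deployment; adjust to your own policy.
BLOCKING = frozenset({"CRITICAL", "HIGH"})


def blocking_findings(report_json: str,
                      blocking: frozenset[str] = BLOCKING) -> list[tuple[str, str, str]]:
    """Return (target, vulnerability ID, severity) for each blocking finding."""
    report = json.loads(report_json)
    found = []
    for result in report.get("Results") or []:
        # Targets without findings may omit "Vulnerabilities" or set it to null.
        for vuln in result.get("Vulnerabilities") or []:
            severity = vuln.get("Severity", "UNKNOWN")
            if severity in blocking:
                found.append((result.get("Target", "?"),
                              vuln.get("VulnerabilityID", "?"), severity))
    return found


# Usage with a tiny hand-written report (IDs are placeholders, not real CVEs).
sample = json.dumps({"Results": [
    {"Target": "app (debian 12)", "Vulnerabilities": [
        {"VulnerabilityID": "CVE-0000-0001", "Severity": "HIGH"},
        {"VulnerabilityID": "CVE-0000-0002", "Severity": "LOW"}]},
    {"Target": "requirements.txt", "Vulnerabilities": None},
]})
print(blocking_findings(sample))
```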

Access control lists (ACLs) should restrict who can push and pull images, while image signing (for example, with Sigstore Cosign or Docker Content Trust) lets you verify that an image came from a trusted publisher and has not been altered. Employing robust authentication and authorization mechanisms for registry access, such as RBAC (Role-Based Access Control), is vital to prevent unauthorized access and image tampering.
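Full signature verification needs a dedicated tool, but one integrity building block is easy to show: registries address image content by SHA-256 digest, so referencing an image as `name@sha256:<digest>` instead of a mutable tag like `:latest` means you pull exactly the bytes you reviewed. The minimal sketch below, with hypothetical helper names, checks that a reference is pinned and verifies downloaded content against its digest. It does not prove who published the image; that is what signing adds.

```python
import hashlib
import hmac
import re

# A digest reference ends in "@sha256:" followed by 64 lowercase hex characters.
_DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned(image_ref: str) -> bool:
    """True if the reference names an immutable digest rather than a mutable tag."""
    return bool(_DIGEST_RE.search(image_ref))


def digest_of(blob: bytes) -> str:
    """Content digest in the "sha256:<hex>" form registries use."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify_blob(blob: bytes, expected: str) -> bool:
    """Check downloaded content against a pinned digest using a constant-time compare."""
    return hmac.compare_digest(digest_of(blob), expected)


# Usage: any change to the content, even one byte, changes the digest.
manifest = b'{"schemaVersion": 2}'
pinned_ref = "registry.example.com/team/app@" + digest_of(manifest)
print(is_pinned(pinned_ref), is_pinned("registry.example.com/team/app:latest"))
print(verify_blob(manifest, digest_of(manifest)), verify_blob(manifest + b" ", digest_of(manifest)))
```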

Security Strategy for Cloud Container Server Deployment

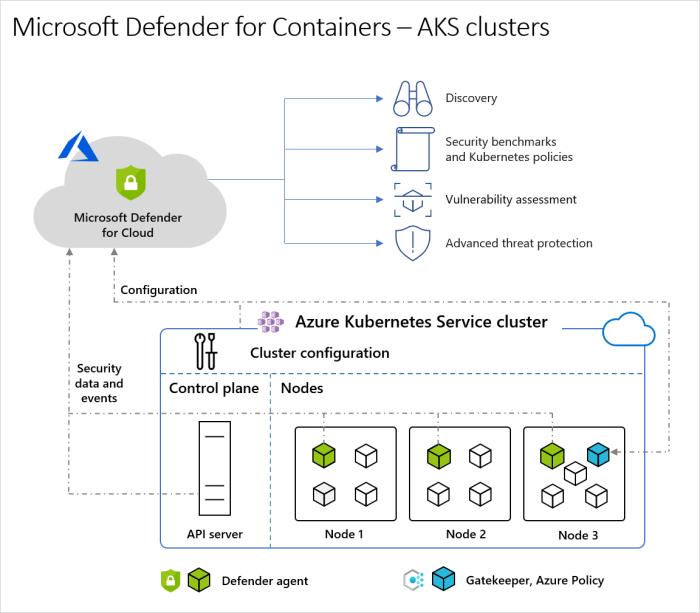

A comprehensive security strategy should include network segmentation to isolate containers and limit the impact of breaches. This can be achieved using virtual private clouds (VPCs) and security groups within cloud providers. Access control should be implemented at multiple layers: network level, container level, and application level. Using tools like Kubernetes Role-Based Access Control (RBAC) allows for granular control over access to resources.
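The RBAC model mentioned above boils down to roles that grant verbs on resource types, plus bindings that attach roles to users. The sketch below is a deliberately simplified model of that idea: it ignores namespaces, API groups, ClusterRoles, and resource names, and the role names and users are hypothetical. Like Kubernetes RBAC, it is purely additive, with no deny rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One permission rule: which verbs are allowed on which resource types."""
    resources: frozenset[str]
    verbs: frozenset[str]

    def permits(self, verb: str, resource: str) -> bool:
        # "*" acts as a wildcard, as it does in Kubernetes rules.
        return ("*" in self.resources or resource in self.resources) and (
            "*" in self.verbs or verb in self.verbs)


def is_allowed(user: str, verb: str, resource: str,
               roles: dict[str, list[Rule]],
               bindings: dict[str, list[str]]) -> bool:
    """Grant access if any role bound to the user has a matching rule.

    There are no deny rules, so anything not explicitly granted is refused.
    """
    return any(rule.permits(verb, resource)
               for role in bindings.get(user, [])
               for rule in roles.get(role, []))


roles = {
    "pod-reader": [Rule(frozenset({"pods"}), frozenset({"get", "list", "watch"}))],
    "deployer": [Rule(frozenset({"deployments"}), frozenset({"get", "create", "update"}))],
}
bindings = {"alice": ["pod-reader"], "ci-bot": ["pod-reader", "deployer"]}

print(is_allowed("alice", "list", "pods", roles, bindings))
print(is_allowed("alice", "delete", "pods", roles, bindings))
```

Giving the CI system its own narrowly scoped binding, rather than reusing a human admin's, is what keeps a compromised pipeline from reading or deleting everything.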

Regular security audits and penetration testing should be conducted to identify and address vulnerabilities before they can be exploited. Implementing a robust logging and monitoring system is also essential for detecting and responding to security incidents promptly. This involves centralized logging, intrusion detection, and security information and event management (SIEM) systems.

Potential Attack Vectors and Mitigation Techniques

Attack vectors against cloud container servers include exploiting vulnerabilities in the container runtime, compromising the host operating system, or targeting the container orchestration platform (e.g., Kubernetes). Mitigation techniques include using a secure container runtime, regularly patching the host operating system, and implementing strong authentication and authorization for the orchestration platform. Regular security scans and vulnerability assessments are critical.

Additionally, employing techniques like least privilege access, where containers only have the minimum necessary permissions, significantly reduces the potential impact of a compromise. Network policies within the orchestration platform can further restrict communication between containers and external networks, preventing lateral movement of an attacker. Finally, implementing a robust incident response plan allows for a coordinated and effective response to security incidents.
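Network policies are easiest to reason about as "default deny, then allow specific paths." The sketch below models the pod-label part of Kubernetes NetworkPolicy ingress semantics: a pod that no policy selects accepts all traffic, and once any policy selects it, only sources allowed by at least one selecting policy get in. It ignores namespaces, ports, IP blocks, and egress, and the labels (`app: api`, `app: frontend`) are hypothetical.

```python
from dataclasses import dataclass


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """matchLabels-style check: every selector pair must be on the pod; {} matches all."""
    return all(labels.get(key) == value for key, value in selector.items())


@dataclass
class IngressPolicy:
    pod_selector: dict[str, str]        # pods this policy applies to
    allowed_from: list[dict[str, str]]  # selectors for permitted source pods


def ingress_allowed(src: dict[str, str], dst: dict[str, str],
                    policies: list[IngressPolicy]) -> bool:
    """Decide whether a pod labelled `src` may connect to a pod labelled `dst`."""
    selecting = [p for p in policies if matches(p.pod_selector, dst)]
    if not selecting:
        return True  # a pod no policy selects accepts all ingress
    # Once selected, only sources allowed by some selecting policy get in.
    return any(matches(sel, src) for p in selecting for sel in p.allowed_from)


policies = [
    IngressPolicy(pod_selector={}, allowed_from=[]),  # default deny for every pod
    IngressPolicy(pod_selector={"app": "api"}, allowed_from=[{"app": "frontend"}]),
]
print(ingress_allowed({"app": "frontend"}, {"app": "api"}, policies))
print(ingress_allowed({"app": "frontend"}, {"app": "db"}, policies))
```

The second check is the lateral-movement case: even a legitimate frontend pod cannot reach the database directly, so a compromised frontend cannot either.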

Scalability and Performance Optimization of Cloud Container Servers

Containerization offers amazing scalability and performance benefits, but realizing that potential requires a strategic approach. Understanding how to scale your applications and optimize their performance within the cloud container environment is key to building robust and efficient systems. This section explores techniques for achieving both horizontal and vertical scaling, optimizing application performance, and leveraging load balancing and auto-scaling for optimal resource utilization.

Horizontal Scaling

Horizontal scaling involves adding more instances of your application to handle increased load. Instead of upgrading a single server, you deploy multiple containers across a cluster of machines. This distributes the workload, improving responsiveness and preventing a single point of failure. For example, imagine a photo-sharing app experiencing a surge in users. Instead of upgrading the single server running the application, you simply spin up more containers, each handling a portion of the user requests.

This approach is generally preferred for its flexibility and resilience, but it depends on your application being stateless: each container should be able to handle any request independently, keeping session data and other state in an external database or cache rather than in a specific container's local memory or disk.
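Here is a minimal sketch of why statelessness matters for the photo-sharing example. Three replicas sit behind a naive round-robin balancer, and because every replica writes to the same shared store, consecutive uploads from one user land in one album no matter which replica serves them. The class names are hypothetical, and the in-memory dict merely stands in for an external database or cache; in a real deployment, load balancing happens outside the app.

```python
import itertools


class SharedStore:
    """Stand-in for an external database or cache that every replica can reach."""

    def __init__(self) -> None:
        self.albums: dict[str, list[str]] = {}


class Replica:
    """One stateless container instance: it keeps no per-user data of its own."""

    def __init__(self, name: str, store: SharedStore) -> None:
        self.name = name
        self.store = store

    def upload(self, user: str, photo: str) -> tuple[str, list[str]]:
        # All state lives in the shared store, so any replica can serve any user.
        album = self.store.albums.setdefault(user, [])
        album.append(photo)
        return self.name, list(album)


store = SharedStore()
replicas = [Replica(f"web-{i}", store) for i in range(3)]
balancer = itertools.cycle(replicas)  # naive round-robin load balancing

for photo in ["beach.jpg", "sunset.jpg", "dog.jpg"]:
    served_by, album = next(balancer).upload("alice", photo)
    print(served_by, album)
```

If each replica kept its own dict instead, the three uploads would end up in three different albums, which is exactly the failure stateless design avoids.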

Vertical Scaling

Vertical scaling, on the other hand, involves increasing the resources (CPU, memory, storage) allocated to an existing container or server. This is a simpler approach for smaller applications or temporary spikes in traffic. However, vertical scaling has limitations. Eventually, you’ll reach the limits of a single machine’s capacity. For instance, you might initially handle increased traffic by allocating more RAM to your existing containers.

However, once you hit the maximum RAM available on the host machine, you’ll need to switch to horizontal scaling to further expand your application’s capacity.
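The ceiling on vertical scaling is simple arithmetic: one container can never be given more memory than a single node can allocate. The sketch below, with hypothetical numbers and function name, picks vertical scaling while the requirement fits on one node and otherwise computes how many replicas are needed. It assumes the workload splits evenly across replicas, which only holds for stateless services; note also that a node's allocatable memory is somewhat below its total RAM because some is reserved for system daemons.

```python
import math


def plan_memory_scaling(required_mib: int, node_allocatable_mib: int) -> tuple[str, int, int]:
    """Return (strategy, replicas, MiB per replica) for a total memory requirement."""
    if required_mib <= node_allocatable_mib:
        # Still fits on one machine: just give the container more memory.
        return "vertical", 1, required_mib
    # One container cannot exceed a node's allocatable memory, so scale out.
    replicas = math.ceil(required_mib / node_allocatable_mib)
    return "horizontal", replicas, math.ceil(required_mib / replicas)


print(plan_memory_scaling(6_000, 8_000))
print(plan_memory_scaling(20_000, 8_000))
```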

Performance Optimization Techniques

Optimizing the performance of containerized applications requires a multi-faceted approach. Efficient code, optimized images, and proper resource allocation are all crucial. Using smaller container images reduces the time it takes to start containers and lowers the overall resource consumption. Employing techniques like caching, connection pooling, and asynchronous operations can significantly improve application responsiveness. Profiling your application to identify performance bottlenecks is essential for targeted optimization efforts.

For example, identifying slow database queries or inefficient algorithms allows for focused improvements.
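Caching is often the quickest win once profiling points at a slow query. The sketch below wraps a hypothetical slow lookup with Python's standard `functools.lru_cache` and times repeated calls with `time.perf_counter`; the function name and its placeholder result are illustrative. Two caveats apply in a container setting: this cache lives inside one process, so each replica keeps its own copy (a shared cache such as Redis is needed to share results across replicas), and `lru_cache` never expires entries, so it suits data that rarely changes.

```python
import functools
import time

db_calls = 0  # counts how often the slow path actually runs


@functools.lru_cache(maxsize=1024)
def album_photo_count(album_id: int) -> int:
    """Pretend database query; the body only runs on a cache miss."""
    global db_calls
    db_calls += 1
    time.sleep(0.05)  # simulate a slow database round trip
    return album_id * 3  # placeholder result


start = time.perf_counter()
for _ in range(3):
    album_photo_count(42)
elapsed = time.perf_counter() - start
print(db_calls, album_photo_count.cache_info().hits, f"{elapsed:.3f}s")
```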

Load Balancing and Auto-Scaling

Load balancing distributes incoming traffic across multiple containers, preventing any single container from becoming overloaded. This ensures consistent performance and high availability. Auto-scaling automatically adjusts the number of containers based on real-time demand. When traffic increases, more containers are automatically provisioned; when traffic decreases, extra containers are scaled down, optimizing resource utilization and cost efficiency. This dynamic scaling ensures your application can handle fluctuating workloads without manual intervention.

A good example is a cloud provider’s load balancer distributing traffic across a fleet of containers running a web application, preventing performance degradation during peak hours.
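For a concrete view of auto-scaling, the Kubernetes Horizontal Pod Autoscaler documentation gives its core rule as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)], skipping changes when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that arithmetic plus min/max clamping; the real controller also applies stabilization windows, pod readiness handling, and scaling policies, and the default bounds here are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA arithmetic: scale replicas in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid churn
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds, like the HPA's min/max replica settings.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 50% target.
print(desired_replicas(4, current_metric=90, target_metric=50))  # prints 8
```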


Resource Utilization Monitoring and Bottleneck Identification

Effective monitoring is vital for identifying performance bottlenecks. Tools like Prometheus, Grafana, and Datadog provide real-time visibility into CPU utilization, memory consumption, network I/O, and other key metrics. By analyzing these metrics, you can pinpoint areas needing optimization. For example, consistently high CPU utilization on a specific container might indicate the need for more powerful hardware or code optimization.

Similarly, high memory consumption could point to memory leaks or inefficient data structures within your application. Regular monitoring allows for proactive identification and resolution of performance issues before they impact users.
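Once metrics are collected, simple rules can surface both patterns described above. The sketch below assumes you have already exported per-container samples from a monitoring system such as Prometheus; the input shape, thresholds, and function name are hypothetical. A memory curve that only climbs is a hint of a leak, not proof, since caches and warm-up also grow memory.

```python
from statistics import mean


def find_bottlenecks(samples: dict[str, list[dict[str, float]]],
                     cpu_threshold: float = 0.85,
                     min_leak_growth_mib: float = 50) -> dict[str, list[str]]:
    """Flag containers with sustained high CPU or steadily climbing memory.

    `samples` maps a container name to time-ordered readings, where "cpu" is
    utilization as a fraction of the CPU limit and "mem_mib" is memory in MiB.
    """
    findings: dict[str, list[str]] = {}
    for name, points in samples.items():
        if not points:
            continue  # nothing to judge yet
        cpu = [p["cpu"] for p in points]
        mem = [p["mem_mib"] for p in points]
        notes = []
        if mean(cpu) >= cpu_threshold:
            notes.append("sustained high CPU")
        never_drops = all(later >= earlier for earlier, later in zip(mem, mem[1:]))
        if never_drops and mem[-1] - mem[0] >= min_leak_growth_mib:
            notes.append("memory only grows: possible leak")
        if notes:
            findings[name] = notes
    return findings


samples = {
    "api": [{"cpu": 0.90, "mem_mib": 300}, {"cpu": 0.95, "mem_mib": 300}, {"cpu": 0.88, "mem_mib": 301}],
    "worker": [{"cpu": 0.20, "mem_mib": 200}, {"cpu": 0.30, "mem_mib": 260}, {"cpu": 0.25, "mem_mib": 340}],
    "web": [{"cpu": 0.30, "mem_mib": 100}, {"cpu": 0.40, "mem_mib": 90}, {"cpu": 0.35, "mem_mib": 110}],
}
print(find_bottlenecks(samples))
```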

Cost Optimization for Cloud Container Servers

Running cloud container servers can be incredibly efficient, but keeping costs in check requires a proactive approach. Understanding pricing models, optimizing resource allocation, and employing smart strategies are crucial for maximizing your return on investment. This section explores key methods to significantly reduce your cloud container spending without sacrificing performance or functionality.

Strategies for Reducing Costs

Several strategies can significantly reduce your cloud container server expenses. Right-sizing your instances based on actual resource needs, rather than over-provisioning, is a major cost saver. Leveraging spot instances or preemptible VMs for non-critical workloads can drastically reduce costs, as these offer significant discounts compared to on-demand pricing. Auto-scaling based on real-time demand keeps provisioned capacity close to what you actually use, cutting spending on idle capacity.

Finally, regularly reviewing and optimizing your container images to minimize their size can lead to faster deployments and reduced storage costs.
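Right-sizing starts from observed usage rather than guesses. A minimal sketch, assuming you have per-container CPU usage samples in millicores: size the request to a high percentile of real usage plus some headroom. The 95th percentile, the 20% headroom, and the function names are illustrative choices, not a standard; Kubernetes' Vertical Pod Autoscaler can produce similar recommendations automatically.

```python
import math


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_cpu_request(usage_millicores: list[int], pct: int = 95,
                          headroom_pct: int = 20) -> int:
    """Size the CPU request to the pct-th percentile of usage plus headroom."""
    observed = percentile(usage_millicores, pct)
    return math.ceil(observed * (100 + headroom_pct) / 100)


# Usage: 100 samples spread between 100m and 1090m.
usage = list(range(100, 1100, 10))
print(recommend_cpu_request(usage))  # prints 1248
```

Sizing to a high percentile instead of the peak accepts occasional CPU contention in exchange for not paying for capacity that is idle almost all the time.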

Optimizing Resource Allocation and Utilization

Efficient resource utilization is paramount for cost optimization. Container orchestration tools like Kubernetes allow granular control over resource allocation, so containers receive only the resources they need. Regular monitoring of resource consumption helps identify bottlenecks and areas for improvement. Resource limits and quotas prevent resource hogging: per-container limits cap what a single container can consume, while namespace-level resource quotas cap the total a team or application can request, ensuring fair sharing and preventing unexpected cost spikes.

For example, if you notice a specific container consistently consuming excessive CPU, you can adjust its resource limits to prevent it from impacting other containers and increasing your overall bill.
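Kubernetes expresses CPU in cores or millicores ("500m" is half a core), and a ResourceQuota rejects new pods whose requests would push a namespace past its hard limit. The sketch below mimics that admission check for CPU requests only; it handles just plain and "m"-suffixed quantities, and the function names and numbers are hypothetical.

```python
def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "250m" or "1.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def fits_quota(new_requests: list[str], used_millicores: int, hard_limit: str) -> bool:
    """Would a pod with these container CPU requests stay within the namespace quota?"""
    requested = sum(cpu_to_millicores(q) for q in new_requests)
    return used_millicores + requested <= cpu_to_millicores(hard_limit)


# Namespace already uses 3 cores of a 4-core quota.
print(fits_quota(["500m", "250m"], used_millicores=3000, hard_limit="4"))
print(fits_quota(["2"], used_millicores=3000, hard_limit="4"))
```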

Cloud Provider Pricing Models for Container Services

Cloud providers typically offer several pricing models for container services. The most common is pay-as-you-go (on-demand), where you pay for the compute resources consumed by the hour or by the second. Reserved instances provide discounts in exchange for a longer-term usage commitment, which suits stable workloads. Spot instances offer significantly lower prices for less critical workloads that can tolerate interruptions. Finally, some providers add container-specific charges, such as a per-cluster management fee for managed Kubernetes or per-vCPU and per-GB billing for serverless container options.

Choosing the appropriate pricing model depends heavily on the nature and predictability of your workload. For example, a highly scalable application with fluctuating demand might benefit from a combination of on-demand and spot instances, while a stable application could leverage reserved instances for cost savings.
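A quick way to compare pricing mixes is to model a steady baseline and a bursty peak separately. The sketch below prices a baseline that runs all month and extra nodes that run only during peaks, either all on-demand or with the baseline reserved and the burst on spot. The hourly rate, discounts, and node counts are made-up placeholders, not any provider's actual prices, and the model assumes the reserved baseline really runs around the clock (reserved capacity is billed whether or not you use it).

```python
import math

HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12


def monthly_cost(baseline_nodes: int, burst_nodes: int, burst_hours: float,
                 on_demand_rate: float, reserved_discount: float = 0.0,
                 spot_discount: float = 0.0) -> float:
    """Baseline nodes run all month; burst nodes run only during peaks.

    Discounts are fractions off the on-demand hourly rate (0.4 means 40% off).
    """
    baseline = baseline_nodes * HOURS_PER_MONTH * on_demand_rate * (1 - reserved_discount)
    burst = burst_nodes * burst_hours * on_demand_rate * (1 - spot_discount)
    return round(baseline + burst, 2)


# Hypothetical: 4 always-on nodes, 6 extra nodes for 120 peak hours, $0.10/hour.
all_on_demand = monthly_cost(4, 6, 120, on_demand_rate=0.10)
mixed = monthly_cost(4, 6, 120, on_demand_rate=0.10,
                     reserved_discount=0.4, spot_discount=0.7)
print(all_on_demand, mixed)
```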

Cost Optimization Checklist for Cloud Container Server Deployments

Before deploying, and regularly thereafter, it’s crucial to systematically review your cloud container costs. This checklist provides a structured approach:

- Right-size your container instances: Avoid over-provisioning resources.

- Utilize auto-scaling: Scale resources up or down based on demand.

- Explore spot instances: Leverage discounts for non-critical workloads.

- Optimize container images: Minimize image size for faster deployments and reduced storage costs.

- Implement resource quotas and limits: Prevent resource hogging and ensure fair sharing.

- Regularly monitor resource consumption: Identify and address bottlenecks.

- Leverage cost optimization tools: Many cloud providers offer tools to analyze and optimize spending.

- Choose the appropriate pricing model: Select a model aligned with your workload characteristics.

- Consider serverless containers: Evaluate whether serverless container options, such as AWS Fargate or Google Cloud Run, suit specific tasks.

- Implement efficient logging and monitoring: Reduce unnecessary data storage and processing costs.

Case Studies of Cloud Container Server Implementations

This section examines real-world examples of cloud container server deployments across diverse industries, highlighting successful implementations, challenges encountered, and solutions adopted. Analyzing these case studies provides valuable insights into best practices and potential pitfalls to avoid when designing and implementing your own containerized infrastructure.

Netflix’s Microservices Architecture

Netflix, a pioneer in cloud computing, famously moved to a microservices architecture on AWS and later brought containers into that platform through Titus, its in-house container management system. This allowed them to independently deploy and scale individual components of their platform, leading to increased agility and resilience. Challenges included managing the complexity of a vast number of microservices and ensuring consistent deployment across multiple regions. Solutions involved custom orchestration tooling and a robust monitoring system to track performance and identify potential issues.

The transition, though complex, significantly improved Netflix’s ability to deliver features and handle massive traffic spikes during peak demand.

- Improved agility and faster feature releases.

- Increased resilience through independent service deployments.

- Enhanced scalability to handle fluctuating user demand.

- Significant complexity in managing a large microservices ecosystem.

Spotify’s Containerized Platform

Spotify also leverages containers extensively for its music streaming service. Their implementation focuses on a highly decentralized approach, enabling teams to operate independently. They faced challenges related to maintaining consistency across various services and ensuring efficient resource utilization. Solutions included implementing strict guidelines for container image creation and utilizing advanced monitoring and logging tools. This strategy has allowed Spotify to maintain a fast-paced development cycle while delivering a seamless user experience.

- Independent team operation and faster development cycles.

- Efficient resource utilization through optimized container images.

- Challenges in maintaining consistency and managing decentralized infrastructure.

- Improved scalability and resilience through robust monitoring and logging.

A Large E-commerce Company’s Containerization for Peak Season

A major e-commerce company utilized containerization to handle the massive traffic spikes experienced during peak shopping seasons like Black Friday and Cyber Monday. Their primary challenge was scaling their infrastructure rapidly and cost-effectively to meet unpredictable demand. They successfully addressed this by implementing auto-scaling features within their Kubernetes clusters, enabling the dynamic provisioning and de-provisioning of containers based on real-time traffic patterns.

This approach minimized infrastructure costs while ensuring a smooth shopping experience for customers even under extreme load.

- Successful handling of peak traffic and unpredictable demand.

- Cost-effective scaling through auto-scaling features in Kubernetes.

- Challenge of provisioning capacity for traffic spikes that are hard to predict.

- Improved customer experience during peak seasons.

So, there you have it – a whirlwind tour of cloud container servers! From understanding the basics to mastering advanced deployment strategies and optimization techniques, we’ve covered a lot of ground. Remember, the key to success with cloud container servers lies in choosing the right tools for your project, implementing robust security measures, and continuously optimizing for performance and cost.

Now go forth and containerize!

Answers to Common Questions

What’s the difference between Docker and Kubernetes?

Docker builds container images and runs containers on a single host, while Kubernetes schedules, scales, and manages containers across a cluster of machines.

How do I choose a cloud provider for container services?

Consider factors like pricing, features offered (managed Kubernetes services, etc.), existing infrastructure, and your team’s familiarity with the provider’s tools.

Are cloud container servers secure?

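They can be, but security is not automatic. Because containers share the host's kernel, one misconfigured container can put other workloads at risk, so implement strong security practices throughout the entire lifecycle, from image building to runtime environment configuration and access control.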

What are some common container security vulnerabilities?

Common vulnerabilities include insecure images, misconfigured networks, insufficient access control, and vulnerabilities in the orchestration platform itself.