Cloud computing vs. server farm: it’s a debate that’s been raging for years, and for good reason! Both offer ways to store and process data, but their approaches, costs, and overall vibes are wildly different. Think of it like this: server farms are like owning a massive, high-maintenance house, where you’re responsible for *everything*. Cloud computing? More like renting a sleek apartment; someone else handles the plumbing and electrical work (most of the time!). This comparison dives into the nitty-gritty of each, exploring which option best fits your needs, budget, and tolerance for tech headaches.

We’ll break down the key differences, from cost and scalability to security and environmental impact. We’ll explore the pros and cons of each, helping you decide which infrastructure is the right fit for your project, whether you’re a small startup or a massive corporation. Get ready to geek out on all things cloud and server!

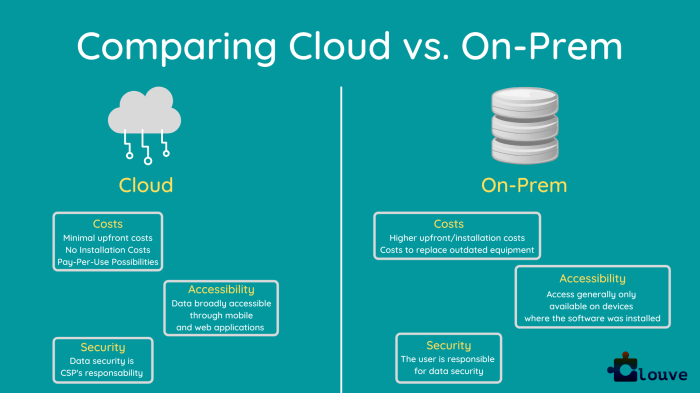

Cost Comparison

Choosing between cloud computing and a server farm involves a significant financial decision. The total cost of ownership (TCO) for each option varies dramatically depending on factors like business size, specific needs, and chosen service levels. This comparison highlights the key cost differences, helping you make an informed choice.

Understanding the cost structure is crucial. Cloud computing typically involves a mix of variable and fixed costs, while server farms lean more heavily on upfront fixed investments with ongoing maintenance costs. This impacts budgeting and long-term financial planning significantly.

Total Cost of Ownership Comparison

The following table compares the TCO for cloud computing and server farms across three different business sizes: small, medium, and large. These figures are estimations based on industry averages and should be considered illustrative rather than precise. Actual costs will vary greatly depending on specific requirements and vendor choices.

| Cost Category | Small Business (Cloud) | Small Business (Server Farm) | Medium Business (Cloud) | Medium Business (Server Farm) | Large Business (Cloud) | Large Business (Server Farm) |
|---|---|---|---|---|---|---|
| Initial Investment | $0 – $1000 | $10,000 – $50,000 | $1000 – $5000 | $50,000 – $250,000 | $5000 – $25,000 | $250,000 – $1,000,000+ |
| Ongoing Maintenance | $100 – $500/month | $500 – $2500/month | $500 – $2500/month | $2500 – $10,000/month | $2500 – $10,000+/month | $10,000+/month |
| Scalability Costs | Highly variable, generally low | High, requires significant upfront investment | Variable, moderate | High, significant infrastructure upgrades | Variable, potentially high but manageable | Extremely high, major infrastructure overhauls |
| Estimated Annual Recurring Cost (12 × monthly maintenance, excluding initial investment) | $1200 – $6000 | $6000 – $30,000+ | $6000 – $30,000 | $30,000 – $120,000+ | $30,000 – $120,000+ | $120,000+ |
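To see how the rows of the table relate to each other, here is a minimal Python sketch. It assumes the annual figure is simply twelve times the monthly maintenance figure (which is what the table's numbers work out to) and adds the initial investment on top for a multi-year view. The function names and the three-year horizon are illustrative choices, not part of any vendor's pricing model.

```python
def annual_recurring_cost(monthly_maintenance: float) -> float:
    """Yearly recurring cost derived from a monthly maintenance figure."""
    return 12 * monthly_maintenance


def multi_year_tco(initial_investment: float, monthly_maintenance: float, years: int) -> float:
    """Initial investment plus recurring cost over the given number of years."""
    return initial_investment + years * annual_recurring_cost(monthly_maintenance)


# Low-end small-business figures taken from the table above.
cloud_tco = multi_year_tco(initial_investment=0, monthly_maintenance=100, years=3)
farm_tco = multi_year_tco(initial_investment=10_000, monthly_maintenance=500, years=3)

print(f"3-year cloud TCO:       ${cloud_tco:,.0f}")
print(f"3-year server farm TCO: ${farm_tco:,.0f}")
```

Swapping in the high-end figures, or a longer horizon, shows how quickly the upfront investment gets diluted (or doesn't) by recurring costs.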

Variable vs. Fixed Costs

Cloud computing emphasizes variable costs. You pay for what you use, scaling resources up or down as needed. This flexibility is a major advantage, allowing businesses to adapt to fluctuating demands without large upfront investments. Fixed costs are primarily subscription fees and any capacity you commit to in advance; data transfer charges scale with usage, so they belong with the variable costs.

In contrast, server farms are characterized by substantial fixed costs. These include the initial hardware purchase, facility rental, and ongoing maintenance contracts. Variable costs are mainly related to power consumption and potential hardware upgrades.
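The variable-versus-fixed split can be turned into a rough break-even estimate. The sketch below assumes a simple linear model: cloud cost grows with usage hours, while a server farm pays a fixed monthly amount plus a small per-hour power cost. Every rate in it is a hypothetical placeholder, not a real price.

```python
from typing import Optional


def cloud_monthly_cost(usage_hours: float, rate_per_hour: float) -> float:
    """Pay-as-you-go: cost is proportional to usage."""
    return usage_hours * rate_per_hour


def farm_monthly_cost(usage_hours: float, fixed_monthly: float, power_per_hour: float) -> float:
    """Mostly fixed cost, plus a small usage-dependent power cost."""
    return fixed_monthly + usage_hours * power_per_hour


def break_even_hours(rate_per_hour: float, fixed_monthly: float, power_per_hour: float) -> Optional[float]:
    """Usage at which both options cost the same.

    Returns None when the cloud rate is not higher than the farm's
    per-hour cost, because the cloud is then cheaper at any usage level.
    """
    margin = rate_per_hour - power_per_hour
    if margin <= 0:
        return None
    return fixed_monthly / margin


# Hypothetical rates, chosen only to illustrate the calculation.
hours = break_even_hours(rate_per_hour=0.75, fixed_monthly=2000, power_per_hour=0.25)
print(f"Break-even at {hours:.0f} compute hours per month")
```

Below the break-even point the pay-as-you-go model wins; above it, the fixed investment starts to pay off. Real pricing is rarely this linear, so treat the result as a first approximation.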

Hidden Costs

Both cloud computing and server farms have potential hidden costs that can significantly impact the overall budget. Careful planning and thorough vendor due diligence are crucial to avoid unpleasant surprises.

For cloud computing, hidden costs can include:

- Data transfer charges exceeding free tiers.

- Unexpected costs associated with complex configurations or specialized services.

- Charges for support beyond basic levels.

- Costs related to data egress (transferring data out of the cloud).

For server farms, hidden costs can include:

- Unexpected maintenance and repair expenses.

- Costs associated with network upgrades and security enhancements.

- Staffing costs for IT administration and maintenance.

- Facility costs beyond initial estimates, such as power or cooling upgrades.

Scalability and Flexibility

Cloud computing and server farms offer vastly different approaches to scaling and adapting to changing demands. While both can handle growth, the ease and cost-effectiveness of scaling differ significantly, impacting a business’s agility and responsiveness. The core difference lies in the inherent infrastructure model: cloud computing offers on-demand resources, while server farms require upfront investment and more manual management.

Cloud computing’s scalability is its biggest selling point. Need more processing power? Just spin up more virtual machines. Need more storage? Increase your cloud storage allocation. This on-demand nature allows for rapid scaling to meet unexpected spikes in demand, like a viral marketing campaign or a sudden surge in online shoppers during a holiday sale.

Conversely, scaling a server farm is a much more involved process. Adding servers requires purchasing hardware, configuring the servers, integrating them into the existing network, and potentially upgrading network infrastructure. This process can take days or even weeks, making it difficult to react quickly to unexpected demand fluctuations.

Resource Allocation and Customization

Cloud computing provides unparalleled flexibility in resource allocation. You can easily adjust the computing power, memory, and storage assigned to your applications based on real-time needs. Need more RAM for a specific application? Just allocate it. This granular control allows for optimized resource utilization and cost savings.

Server farms, on the other hand, offer less granular control. While you can configure individual servers, changing resource allocation often requires reconfiguring the entire server or even replacing it, which is time-consuming and potentially disruptive. Customization on a server farm is also more labor-intensive, often requiring specialized expertise and potentially custom-built software solutions. Cloud providers, by contrast, offer a wide array of pre-built services and tools that simplify customization.

Hypothetical Scenario: Sudden Traffic Surge

Imagine a popular online retailer experiencing a massive surge in traffic due to a highly successful Black Friday sale. With cloud computing and autoscaling configured, the retailer’s application scales up automatically to handle the increased load. Additional virtual machines are spun up within minutes, distributing the traffic and ensuring a smooth user experience. As the traffic subsides, these extra resources are automatically scaled down, minimizing costs.

In contrast, a retailer relying solely on a server farm would face a much more challenging situation. If their existing servers are overwhelmed, users will experience slowdowns or even outages. Adding more servers to the farm to handle the increased load would be a lengthy process, and that delay can translate directly into lost revenue, frustrated customers, and damage to the brand’s reputation. Even if they had spare capacity, shifting the load would be complex and potentially disruptive.
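The scale-up and scale-down behavior in this scenario can be sketched as a simple capacity rule. This is a toy model, not any cloud provider's autoscaling API: it assumes each instance handles a fixed number of requests per second, and the traffic figures, capacity, and instance limits are all made up for illustration.

```python
import math


def desired_instances(
    requests_per_second: float,
    capacity_per_instance: float,
    min_instances: int = 2,
    max_instances: int = 50,
) -> int:
    """Number of instances needed for the current load, clamped to a range."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Hypothetical Black Friday traffic: quiet, spike, then back to normal.
traffic = [400, 900, 5200, 12000, 3000, 500]
for rps in traffic:
    count = desired_instances(rps, capacity_per_instance=500)
    print(f"{rps:>6} req/s -> {count} instances")
```

The minimum keeps a baseline running for redundancy, and the maximum acts as a spending cap. A server farm has the same maximum, except that it is set by the hardware already in the racks.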

Security Considerations

Choosing between cloud computing and a server farm involves a careful evaluation of security implications. Both options present unique challenges and require different approaches to risk management. Understanding these differences is crucial for making an informed decision that aligns with your organization’s security posture and risk tolerance. The level of security offered isn’t inherently better in one over the other; it depends on the specific implementation and the diligence of the involved parties.

Cloud computing and server farms employ diverse security measures, but their implementation and responsibility differ significantly. While cloud providers handle much of the infrastructure security, organizations retain responsibility for their data and applications within the cloud environment. On the other hand, organizations managing their own server farms bear the entire burden of infrastructure and application security.

Security Measures in Cloud Computing and Server Farms

Cloud providers typically invest heavily in robust physical and network security, including firewalls, intrusion detection systems, and data encryption at rest and in transit. They also offer various security services like access control lists (ACLs), virtual private clouds (VPCs), and security information and event management (SIEM) systems. In contrast, server farms rely on the organization’s own security team to implement and maintain these measures, often at a higher initial cost but with potentially greater control.

A key difference lies in the scale: cloud providers manage security across massive infrastructure, benefiting from economies of scale and specialized expertise. Server farms, however, require dedicated personnel to handle these tasks, potentially straining resources in smaller organizations.

Potential Security Vulnerabilities

Cloud computing’s shared responsibility model introduces vulnerabilities related to misconfigurations, insecure APIs, and vulnerabilities in third-party applications. Data breaches resulting from misconfigured access controls or compromised credentials are common concerns.

In server farms, the risk of physical security breaches, such as unauthorized access to hardware or data centers, is a significant concern. Furthermore, the responsibility for patching and updating software and operating systems rests solely with the organization, potentially leading to vulnerabilities if not managed diligently. A notable example is the Equifax data breach in 2017, highlighting the consequences of neglecting timely patching of known vulnerabilities in a server farm environment.


Responsibilities for Security Management

The responsibilities for security management differ substantially between cloud computing and server farms.

- Cloud Computing:
  - Cloud Provider: Responsible for the security *of* the cloud (physical infrastructure, network security, etc.).
  - Organization: Responsible for security *in* the cloud (data security, application security, access control, etc.).
- Server Farms:
  - Organization: Responsible for all aspects of security, including physical security, network security, data security, application security, and compliance.

This shared responsibility model in cloud computing, while often beneficial in terms of cost and expertise, necessitates a clear understanding of the boundaries of responsibility between the provider and the organization. Failing to address this can lead to security gaps and increased risk. In server farms, the centralized responsibility, while offering greater control, demands a higher level of internal expertise and resources dedicated to security management.

Performance and Reliability

Choosing between cloud computing and a server farm often hinges on performance and reliability needs. Both offer robust solutions, but their strengths lie in different areas, impacting how quickly applications run and how consistently they’re available. Understanding these differences is key to making an informed decision.

Performance in cloud computing and server farms is affected by a multitude of factors. Network latency, for instance, plays a significant role. Cloud services, distributed across multiple data centers, might experience higher latency depending on geographic location and network congestion. Server farms, on the other hand, often benefit from localized networks, potentially leading to lower latency if all components are geographically close. Hardware failures also impact performance and reliability. Cloud providers employ redundancy and failover mechanisms, mitigating the impact of individual hardware issues. Server farms, while also capable of redundancy, might require more proactive management to ensure consistent uptime.

Network Latency and Geographic Distribution

Network latency, the delay in data transmission, significantly impacts application responsiveness. Cloud providers often use Content Delivery Networks (CDNs) to minimize latency by caching data closer to users. However, if a user is far from the nearest data center, latency can still be an issue. Server farms, especially smaller ones, might not have the geographic reach of cloud providers. But if the farm is strategically located close to the user base, it could offer superior performance in terms of lower latency.

Consider, for example, a video streaming service. A cloud-based solution could leverage CDNs to serve content to users globally with minimal delay, whereas a smaller server farm might struggle to provide the same level of global accessibility and performance.
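Distance sets a hard floor on latency, which is why proximity matters so much here. As a rough sketch, the snippet below estimates the minimum round-trip time over fiber, assuming light travels through fiber at about 200,000 km/s (roughly two-thirds of its speed in a vacuum). Real-world latency is higher because of routing detours, switching, and server processing; the distances are illustrative.

```python
# Approximate signal speed in optical fiber, in km per second.
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time, in milliseconds, from distance alone."""
    return 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S


# Illustrative distances between a user and the serving data center.
for label, km in [("same metro area", 100), ("same continent", 2000), ("overseas", 8000)]:
    print(f"{label:>16}: at least {min_round_trip_ms(km):.0f} ms round trip")
```

A nearby server farm starts with an advantage of tens of milliseconds over a distant cloud region, and a CDN edge node close to the user closes that gap for cacheable content.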

Hardware Failures and Redundancy

Hardware failures are inevitable. Cloud providers mitigate this risk through massive redundancy. They typically replicate data and applications across multiple data centers and servers. If one server fails, another takes over automatically, ensuring minimal disruption. Server farms also employ redundancy, but the scale is usually smaller. The implementation and maintenance of redundancy in a server farm often require more hands-on management and a deeper understanding of the infrastructure. The failure of a critical component in a server farm could lead to significant downtime without robust redundancy measures in place.

Imagine a financial institution: the impact of a prolonged outage in either a cloud or server farm environment could be catastrophic, but the cloud’s inherent redundancy offers a better chance of minimizing disruption.
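The payoff from redundancy can be approximated with a standard availability formula. The sketch below assumes replicas fail independently and that the service stays up as long as at least one replica is healthy. Both are simplifications, since shared power, networking, or software bugs can take down several replicas at once. The 99% single-server figure is a hypothetical input.

```python
HOURS_PER_YEAR = 24 * 365


def combined_availability(single_availability: float, replicas: int) -> float:
    """Availability of N independent replicas where any one can serve traffic."""
    if not 0.0 <= single_availability <= 1.0:
        raise ValueError("single_availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return 1.0 - (1.0 - single_availability) ** replicas


def expected_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year at a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


for n in (1, 2, 3):
    availability = combined_availability(0.99, n)
    hours_down = expected_downtime_hours(availability)
    print(f"{n} replica(s): {availability:.4%} available, ~{hours_down:.2f} h down per year")
```

Each extra replica cuts expected downtime by roughly two orders of magnitude in this idealized model, which is why redundancy at scale is such a strong argument for either approach done well.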

Impact of Downtime on Business

The following table illustrates the potential financial impact of downtime for a business using either cloud computing or a server farm. These figures are illustrative and would vary significantly based on business size, industry, and specific service level agreements (SLAs).

| Downtime (Hours) | Cloud Computing (Estimated Revenue Loss) | Server Farm (Estimated Revenue Loss) | Potential Additional Costs |
|---|---|---|---|
| 1 | $5,000 | $10,000 | Customer support, IT recovery |
| 4 | $20,000 | $40,000 | Reputational damage, lost sales |
| 24 | $100,000+ | $200,000+ | Legal action, business interruption insurance claims |
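The 1-hour and 4-hour rows above follow a simple linear pattern: an hourly loss rate multiplied by the outage length. Here is a minimal sketch of that calculation, using the table's illustrative hourly rates. The optional `fixed_recovery_cost` parameter is an added assumption that stands in for one-off expenses such as emergency IT work.

```python
def downtime_cost(hours: float, revenue_loss_per_hour: float, fixed_recovery_cost: float = 0.0) -> float:
    """Estimated outage cost: lost revenue over time plus any one-off recovery cost."""
    return hours * revenue_loss_per_hour + fixed_recovery_cost


# Hourly loss rates implied by the first row of the table above.
CLOUD_LOSS_PER_HOUR = 5_000
FARM_LOSS_PER_HOUR = 10_000

for hours in (1, 4, 24):
    cloud = downtime_cost(hours, CLOUD_LOSS_PER_HOUR)
    farm = downtime_cost(hours, FARM_LOSS_PER_HOUR)
    print(f"{hours:>2} h outage: cloud ${cloud:,.0f} vs server farm ${farm:,.0f}")
```

Plugging in your own hourly revenue figure is usually the quickest way to decide how much redundancy is worth paying for.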

Environmental Impact

The environmental impact of both cloud computing and server farms is a significant concern in today’s tech landscape. While both contribute to energy consumption and carbon emissions, the distributed nature of cloud computing and the advancements in energy efficiency present a complex picture, defying simple comparisons. Let’s delve into the specifics.

The energy consumption of a traditional server farm is largely localized, making its environmental impact relatively easier to assess. In contrast, the distributed nature of cloud computing makes it challenging to pinpoint the exact environmental footprint, as it’s spread across numerous data centers globally. However, advancements in virtualization, efficient cooling systems, and renewable energy adoption by cloud providers are changing the equation.

Energy Consumption and Carbon Footprint

The sheer scale of server farms necessitates substantial energy consumption for powering servers, cooling systems, and other infrastructure. This high energy demand directly translates into a significant carbon footprint, primarily through greenhouse gas emissions from electricity generation. Cloud computing, while also energy-intensive, benefits from economies of scale and optimized resource utilization. Data centers often leverage techniques like virtualization to consolidate workloads, reducing the overall number of physical servers needed and, consequently, energy consumption.

However, the distributed nature of cloud resources makes accurate assessment complex, requiring comprehensive life-cycle assessments encompassing manufacturing, operation, and disposal. This complexity requires detailed analysis that considers various factors such as location, energy source, and cooling efficiency.

Sustainability Initiatives by Major Cloud Providers

Major cloud providers like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure are increasingly prioritizing sustainability. Their initiatives include investments in renewable energy sources, such as wind and solar power, to offset their carbon footprint. They also implement energy-efficient cooling technologies and optimize their data center designs to minimize energy waste. Many providers publicly report their carbon emissions and sustainability goals, aiming for carbon neutrality or net-zero emissions in the coming years.

For example, AWS has committed to powering its operations with 100% renewable energy by 2025, while Google aims for 24/7 carbon-free energy for its operations by 2030. These commitments demonstrate a growing awareness of environmental responsibility within the industry.

Environmental Differences: Data and Statistics

It’s difficult to provide exact figures comparing cloud computing and server farms due to the varying sizes, locations, and operational efficiencies of both. However, we can illustrate the potential differences with hypothetical examples.

- Scenario 1: A small business utilizes a dedicated server farm for its operations. The energy consumption might be 100 kWh per day, resulting in a substantial carbon footprint depending on the electricity source. This contrasts with a similar business using cloud services, where energy consumption could be significantly lower due to shared resources and efficient data center management.

- Scenario 2: A large enterprise with a massive server farm might consume 10,000 kWh per day. This high energy demand leads to a considerable carbon footprint. A cloud-based equivalent, while still energy-intensive, could potentially achieve a lower carbon footprint through the utilization of renewable energy sources and optimized data center infrastructure by the cloud provider. This highlights the potential for scale and efficiency improvements with cloud computing.

Note: These are hypothetical examples for illustrative purposes. Actual energy consumption and carbon footprints vary significantly based on various factors. Comprehensive life-cycle assessments are necessary for accurate comparisons.
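To turn the energy figures in these scenarios into a carbon estimate, multiply the energy used by the emission intensity of the electricity source. The sketch below does exactly that. The two grid intensity values are hypothetical placeholders chosen to show the effect of a cleaner energy mix; they are not measured figures for any provider or region.

```python
def annual_emissions_kg(daily_kwh: float, grid_kg_co2_per_kwh: float) -> float:
    """Yearly CO2 emissions in kilograms from daily energy use and grid intensity."""
    return daily_kwh * 365 * grid_kg_co2_per_kwh


# Hypothetical emission intensities in kg of CO2 per kWh.
FOSSIL_HEAVY_GRID = 0.5
LOW_CARBON_GRID = 0.125

# Daily consumption figures from Scenario 1 and Scenario 2 above.
for label, daily_kwh in [("small business", 100), ("large enterprise", 10_000)]:
    dirty = annual_emissions_kg(daily_kwh, FOSSIL_HEAVY_GRID)
    clean = annual_emissions_kg(daily_kwh, LOW_CARBON_GRID)
    print(f"{label}: {dirty:,.1f} kg CO2/yr on a fossil-heavy grid, {clean:,.1f} kg on a low-carbon grid")
```

The same workload can differ severalfold in emissions depending only on where the electricity comes from, which is why the energy source matters as much as the raw consumption.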

Ultimately, the “better” choice between cloud computing and a server farm hinges entirely on your specific requirements. Small businesses might find the flexibility and cost-effectiveness of cloud computing more appealing, while large enterprises with stringent security needs or specialized hardware requirements may lean towards a server farm. Understanding the trade-offs (cost vs. control, scalability vs. security, and flexibility vs. complexity) is key. Hopefully, this deep dive has armed you with the knowledge to make an informed decision, and remember, the tech world is always evolving, so stay curious!

Answers to Common Questions

What are the biggest security risks associated with each?

Server farms face risks like physical security breaches, on-site hardware failures, and unpatched software. Cloud computing’s main risks are misconfigured access controls, compromised credentials, and reliance on third-party security.

How much does each option typically cost?

Costs vary wildly based on needs, but generally, server farms involve higher upfront investment but potentially lower long-term costs for large-scale operations. Cloud computing usually has lower upfront costs but can become expensive with high usage.

Which is better for disaster recovery?

Cloud computing often provides superior disaster recovery options due to built-in redundancy and geographically distributed data centers. Server farms require robust, often custom-built, backup and recovery systems.

What level of technical expertise is needed for each?

Server farms demand significant in-house IT expertise for management and maintenance. Cloud computing often requires less specialized knowledge, but some understanding of cloud platforms is beneficial.