Cloud computing versus server: it’s a debate that’s been raging for years, and for good reason! Choosing between these two approaches is a big decision for any business, impacting everything from your budget to your security. This deep dive will unpack the core differences, exploring the pros and cons of each to help you make an informed choice.

We’ll cover cost, scalability, security, performance, and more, giving you a clear picture of which solution best fits your needs.

We’ll start by defining both cloud computing and servers, exploring different cloud deployment models (public, private, hybrid) and contrasting the physical server hardware with the virtualized resources of the cloud. Then, we’ll dive into the nitty-gritty of cost comparisons, security measures, and performance benchmarks. Finally, we’ll examine practical use cases and which type of infrastructure best suits specific applications.

Get ready to level up your understanding of this crucial tech decision!

Defining Cloud Computing and Servers

Okay, so let’s break down cloud computing and servers. They’re related but definitely not the same thing. Think of it like this: servers are the physical machines, the actual hardware, while cloud computing is a way of using and accessing those servers, or rather, their resources, in a more flexible and scalable manner.

Cloud computing fundamentally changes how we think about IT infrastructure. Instead of owning and maintaining all the hardware yourself, you rent computing resources (processing power, storage, networking) from a provider. This provider manages the underlying infrastructure, letting you focus on your applications and data.

Cloud Computing Architectures

The basic architecture of a cloud environment involves several key components working together. You’ve got the physical servers, of course, but also virtualization technologies that allow multiple virtual servers to run on a single physical machine. Then there’s networking infrastructure connecting everything, and sophisticated management software to handle resource allocation, security, and monitoring. The whole system is designed for scalability and redundancy, meaning it can easily adapt to changing demands and handle failures without significant service disruption.

Cloud Deployment Models

There are three main ways to deploy cloud computing: public, private, and hybrid. A public cloud, like AWS or Azure, is a shared service. Multiple organizations use the same infrastructure, sharing resources and paying only for what they consume. A private cloud, on the other hand, is dedicated to a single organization. It might be hosted on-premises or by a third-party provider, but it’s exclusively for internal use.

Finally, a hybrid cloud combines elements of both public and private clouds, allowing organizations to leverage the benefits of each model. For example, a company might use a private cloud for sensitive data and a public cloud for less critical applications.

Server Hardware and its Role

Servers are the workhorses of IT infrastructure. They’re powerful computers designed to handle many simultaneous requests and provide services to multiple users or applications. Think of a web server hosting a website, a database server storing customer information, or a mail server handling email traffic. These servers can range from small, rack-mounted machines to massive, multi-processor systems housed in data centers.

Key hardware components include powerful CPUs, large amounts of RAM, extensive storage (often using hard drives or SSDs), and high-speed network interfaces. Their role is to provide reliable and efficient access to resources, ensuring applications and services function correctly.

Comparing Servers and Virtualized Cloud Resources

A physical server is a tangible piece of hardware—you can touch it, see it, and even hear its fans whirring. It has a fixed amount of resources: a specific number of CPUs, a certain amount of RAM, and a defined storage capacity. In contrast, a virtualized cloud resource is an abstraction. It’s a virtual machine (VM) that runs on top of the underlying physical server hardware.

This VM has its own virtual CPU, RAM, and storage, but these resources are dynamically allocated and managed by the cloud provider. The key difference is flexibility: a physical server’s resources are fixed, while a cloud VM’s resources can be scaled up or down as needed, making it much more adaptable to changing workloads. For instance, a company could easily increase the RAM allocated to a VM during peak demand and reduce it later, avoiding the cost and hassle of purchasing and installing new physical hardware.

Cost and Scalability

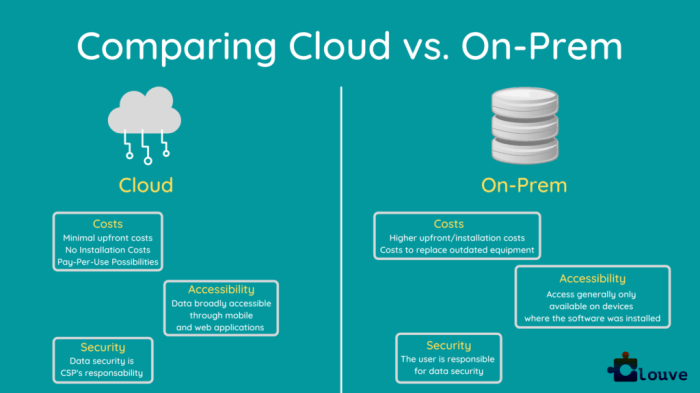

Choosing between cloud computing and on-site servers involves a careful consideration of costs and scalability. While on-site servers represent a significant upfront investment, cloud computing offers a more flexible, pay-as-you-go model. Understanding these differences is crucial for making an informed decision that aligns with your organization’s needs and budget.

Cost Comparison: Cloud vs. On-Premise Servers

The total cost of ownership (TCO) for cloud and on-premise solutions differs significantly across various factors. Upfront costs for on-premise servers include purchasing hardware, software licenses, and setting up the necessary infrastructure. Ongoing expenses encompass maintenance, repairs, energy consumption, and IT staff salaries. Cloud computing, conversely, typically involves subscription fees that scale with usage. This means you only pay for the resources you consume, making it potentially more cost-effective for fluctuating workloads.

Scalability and Resource Allocation

Cloud computing makes scaling far easier. You can adjust your computing resources (processing power, storage, and bandwidth) up or down based on demand, often within minutes. This agility is invaluable for businesses experiencing fluctuating workloads or seasonal peaks. On-premise servers, however, require significant planning and investment to accommodate growth. Scaling up often involves purchasing and installing new hardware, a process that can be time-consuming and disruptive. Scaling down is just as awkward: hardware you have already paid for sits underutilized.
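To make the trade-off concrete, here is a minimal sketch that compares paying for peak capacity around the clock with paying only for what each hour uses. The demand profile and the hourly prices are made-up numbers for illustration, not real provider rates.

```python
def fixed_capacity_cost(hourly_demand, unit_cost_per_hour):
    """On-premise style: provision for the peak and pay for it every hour."""
    peak = max(hourly_demand)
    return peak * unit_cost_per_hour * len(hourly_demand)


def pay_as_you_go_cost(hourly_demand, unit_cost_per_hour):
    """Cloud style: pay only for the units actually used in each hour."""
    return sum(hourly_demand) * unit_cost_per_hour


# Hypothetical demand: number of server units needed in each of 8 hours.
demand = [2, 2, 3, 10, 10, 3, 2, 2]

# Assumed prices: the elastic unit is 50% more expensive per hour.
fixed = fixed_capacity_cost(demand, unit_cost_per_hour=1.0)
elastic = pay_as_you_go_cost(demand, unit_cost_per_hour=1.5)

print(f"fixed capacity: {fixed:.2f}")
print(f"pay-as-you-go:  {elastic:.2f}")
```

With a spiky profile like this one, the elastic option comes out cheaper even at a higher unit price. Swap in a flat profile such as `[10] * 8` and the result flips, which is why steady, predictable workloads can favor owned hardware.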

Five-Year Total Cost of Ownership Comparison

The following table provides a simplified comparison of the TCO for both cloud and on-premise solutions over a five-year period. Note that these figures are illustrative and can vary significantly based on specific needs and circumstances. For example, a company with a consistently high workload might find on-premise solutions more cost-effective in the long run, while a startup with fluctuating demands would likely benefit from the flexibility of the cloud.

| Cost Factor | On-Premise Servers (USD) | Cloud Computing (USD) | Notes |
|---|---|---|---|
| Initial Hardware Investment | 50,000 | 0 | Includes servers, networking equipment, and storage. |
| Software Licenses | 10,000 | 5,000/year | On-premise figure treated as a one-time purchase; cloud figure is an annual subscription. |
| IT Personnel | 75,000/year | 25,000/year | Salaries for system administrators and support staff. Cloud providers handle much of the infrastructure management. |
| Energy Consumption | 5,000/year | 1,000/year | Reduced energy consumption due to shared infrastructure and efficient data centers. |
| Maintenance & Repairs | 10,000/year | Included in subscription | Cloud providers handle maintenance and repairs. |
| Total 5-Year Cost | 510,000 | 155,000 | Sum of the rows above over five years. Illustrative figures; actual costs vary significantly. |
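A table like this is easy to sanity-check by summing the line items yourself. The sketch below does that under two assumptions of mine: any figure without a "/year" suffix is a one-time cost, and "Included in subscription" counts as zero. The dictionary keys are just labels chosen for this example.

```python
YEARS = 5

# (one_time_cost, annual_cost) in USD for each line item in the table.
on_premise = {
    "hardware": (50_000, 0),
    "software_licenses": (10_000, 0),  # assumed one-time: no "/year" in the row
    "it_personnel": (0, 75_000),
    "energy": (0, 5_000),
    "maintenance": (0, 10_000),
}
cloud = {
    "hardware": (0, 0),
    "software_licenses": (0, 5_000),
    "it_personnel": (0, 25_000),
    "energy": (0, 1_000),
    "maintenance": (0, 0),  # assumed zero: included in the subscription
}


def total_cost(items, years=YEARS):
    """One-time costs plus annual costs multiplied by the number of years."""
    return sum(one_time + annual * years for one_time, annual in items.values())


print(f"on-premise, {YEARS} years: {total_cost(on_premise):,} USD")
print(f"cloud, {YEARS} years:      {total_cost(cloud):,} USD")
```

Changing `YEARS` or any single line item shows quickly how sensitive the comparison is to staffing costs, which dominate both columns.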

Performance and Reliability

Choosing between cloud computing and dedicated servers often hinges on balancing performance needs against reliability requirements. Both offer distinct advantages and disadvantages, impacting application speed, uptime, and overall user experience. This section delves into the performance and reliability characteristics of each approach.

Cloud and on-premise server performance varies significantly depending on several factors, including the specific hardware, network infrastructure, and application design. While cloud providers often boast impressive processing power and bandwidth, latency can be a concern depending on geographic location and network congestion. Dedicated servers, on the other hand, can offer more predictable performance if properly configured and maintained, but scaling resources can be significantly more challenging.

Latency, Bandwidth, and Processing Power Comparison

Latency, the delay in data transmission, is generally lower with dedicated servers located close to the user. Cloud providers, however, leverage geographically distributed data centers and content delivery networks (CDNs) to minimize latency for users worldwide. Bandwidth, the amount of data transmitted per unit of time, is typically more readily scalable in the cloud, allowing for handling traffic spikes effectively.

Processing power is highly configurable in both environments; cloud instances can be scaled up or down as needed, while dedicated servers require upfront investment and planning for processing needs.
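Distance puts a hard floor under latency, which is why proximity matters so much. As a rough sketch, assuming signals travel through optical fiber at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) and ignoring routing, queuing, and processing delays, the minimum round-trip time can be estimated like this. The distances are illustrative.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximate; real paths are never this direct


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    one_way_seconds = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_seconds * 1000


# Hypothetical distances between a user and the server.
for label, km in [
    ("same building", 0.1),
    ("same metro area", 50),
    ("another continent", 6000),
]:
    print(f"{label}: at least {min_round_trip_ms(km):.3f} ms")
```

Real-world latency will be higher than these figures, but the gap between a nearby server and a distant data center is already visible from physics alone. This is the gap that regional data centers and CDNs try to close.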

Reliability and Uptime Guarantees

Cloud providers usually offer service level agreements (SLAs) guaranteeing high uptime (e.g., 99.9% or higher). These SLAs often include compensation, typically in the form of service credits, for downtime exceeding agreed-upon thresholds. Maintaining high availability with on-site servers, however, requires significant investment in redundant hardware, power backups, and robust monitoring systems. Unexpected hardware failures, power outages, or human error can lead to significant downtime, and without that investment, recovery times are hard to predict. This necessitates comprehensive disaster recovery planning.
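Uptime percentages are easier to reason about once they are converted into hours of permitted downtime. A minimal sketch, assuming a 365-day year and that downtime is measured over the whole year (actual SLAs usually measure per month and define "downtime" in their own terms):

```python
HOURS_PER_YEAR = 365 * 24  # 8,760; ignores leap years


def allowed_downtime_hours(uptime_percent, period_hours=HOURS_PER_YEAR):
    """Hours of downtime permitted by an uptime percentage over a period."""
    return period_hours * (1 - uptime_percent / 100)


for sla in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(sla)
    print(f"{sla}% uptime allows about {hours:.2f} hours of downtime per year")
```

A 99.9% guarantee works out to roughly 8.76 hours a year, while 99.99% is under an hour. That extra "nine" is the kind of target that is expensive to hit with a single on-site server room.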

Load Balancing and Redundancy

Load balancing distributes incoming traffic across multiple servers to prevent overload and ensure consistent performance. Cloud providers typically handle load balancing automatically, using sophisticated algorithms and infrastructure. With dedicated servers, load balancing requires careful configuration and management of hardware and software load balancers. Redundancy, the ability to continue operation even if a component fails, is achieved through techniques like server clustering and data replication.

Cloud providers incorporate redundancy at multiple levels, including multiple data centers and geographically diverse infrastructure. Dedicated servers require careful planning and implementation of redundant systems to achieve similar levels of resilience.
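Both ideas fit in a few lines of code. The sketch below shows round-robin load balancing that skips servers marked as unhealthy, plus a simple formula for the availability of redundant replicas. It is a toy model: the class and server names are invented for this example, real load balancers use health probes and more sophisticated algorithms, and the availability formula assumes replicas fail independently, which is rarely true in practice.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in turn, skipping any that are marked down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once per call before giving up.
        for _ in range(len(self.servers)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


def combined_availability(single_availability, replicas):
    """Probability that at least one of N independent replicas is up."""
    return 1 - (1 - single_availability) ** replicas


balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
print([balancer.next_server() for _ in range(4)])

balancer.mark_down("web-2")  # simulate a failed server
print([balancer.next_server() for _ in range(3)])

print(f"two 99% replicas: {combined_availability(0.99, 2):.4%}")
```

Traffic keeps flowing after `web-2` fails because requests are simply routed to the remaining servers. On dedicated hardware you build and operate this layer yourself; on a cloud platform it is typically a managed service.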

Performance and Reliability Metrics Comparison

| Metric | Cloud Computing | Dedicated Servers |
|---|---|---|
| Latency | Variable, dependent on location and network; generally higher than local servers but mitigated by CDNs | Generally lower, especially for geographically proximate users |
| Bandwidth | Highly scalable, easily adjusted to meet demand | Limited by physical infrastructure; scaling requires significant upfront investment |
| Processing Power | Highly scalable, easily adjusted on demand | Fixed at initial configuration; scaling requires additional hardware |
| Uptime Guarantee | Typically high (e.g., 99.9% or higher) with SLAs | Dependent on infrastructure maintenance and disaster recovery planning; less predictable |
| Load Balancing | Automated and integrated into the cloud platform | Requires manual configuration and management |
| Redundancy | Built-in at multiple levels, including geographically diverse data centers | Requires proactive planning and implementation of redundant systems |

Use Cases and Suitable Applications

Choosing between cloud computing and traditional server environments depends heavily on the specific application’s needs. Factors like scalability requirements, cost sensitivity, and performance demands play crucial roles in this decision. Understanding these nuances is key to optimizing resource allocation and achieving desired outcomes.

Generally, applications requiring rapid scalability, high availability, and cost-effectiveness often benefit from cloud infrastructure. Conversely, applications with stringent security requirements, latency-sensitive operations, or needing very specific hardware configurations might be better suited for on-premise servers.

Cloud-Suitable Applications

Cloud computing excels with applications that need to handle fluctuating workloads or rapid growth. Its pay-as-you-go model makes it financially attractive for projects with unpredictable demand.

Examples include:

- Web Applications: Scalable and easily deployable across multiple regions. Services like AWS Elastic Beanstalk or Google App Engine simplify deployment and management.

- Mobile Backends: Cloud platforms handle the fluctuating demands of mobile users, ensuring responsiveness even during peak times. Firebase and AWS Amplify are popular choices.

- Big Data Analytics: Cloud-based services like Amazon EMR or Azure HDInsight provide the infrastructure needed for processing massive datasets. This is often far more cost-effective than building and maintaining your own data center.

- Machine Learning/AI: Cloud providers offer pre-trained models and managed services, significantly reducing the time and cost associated with building and deploying AI solutions. Examples include Amazon SageMaker and Google Cloud’s Vertex AI (the successor to AI Platform).

Server-Suitable Applications

Traditional server environments remain advantageous for applications demanding high levels of control, security, and consistent performance. These environments often provide greater customization and predictability.

Examples include:

- High-Performance Computing (HPC): Applications requiring massive parallel processing, such as scientific simulations or financial modeling, may benefit from dedicated, high-bandwidth on-premise infrastructure. The predictability and control outweigh the cost of dedicated hardware.

- Real-time Applications: Applications requiring extremely low latency, such as financial trading systems or industrial control systems, often benefit from on-premise servers to minimize network delays. Precise control over network infrastructure is vital.

- Applications with Strict Security Requirements: Highly sensitive data or applications subject to strict regulatory compliance (e.g., HIPAA or PCI DSS) may necessitate on-premise servers to maintain complete control over data security and access.

- Legacy Systems: Older applications may not be easily migrated to the cloud, requiring continued maintenance on existing server infrastructure. Re-architecting can be expensive and time-consuming.

Application Suitability Comparison

| Application Type | Cloud Suitability | Server Suitability | Rationale |
|---|---|---|---|
| Web Applications (e.g., e-commerce) | High | Medium | Cloud offers easy scalability and cost-effectiveness for handling fluctuating traffic. On-premise can be suitable for very high traffic sites with dedicated infrastructure. |
| Databases (e.g., relational, NoSQL) | High (for many use cases) | Medium (for specific needs) | Cloud-based databases offer managed services and scalability. On-premise is preferred when strict data residency or security requirements exist. |
| High-Performance Computing (e.g., weather modeling) | Medium | High | While cloud HPC exists, dedicated on-premise clusters often provide better performance and control for very demanding applications. |
| Real-time Systems (e.g., financial trading) | Low | High | Latency concerns make on-premise servers the preferred choice for applications requiring extremely low response times. |

Ultimately, the “best” choice between cloud computing and on-site servers depends entirely on your specific needs and priorities. While cloud offers unparalleled scalability and often lower upfront costs, traditional servers can provide greater control and potentially better security in certain contexts. By carefully weighing the factors discussed – cost, scalability, security, performance, and management – you can make a strategic decision that aligns with your business goals and long-term vision.

Remember to factor in your team’s expertise and the complexity of your applications when making your final call. Now go forth and conquer the cloud (or your server room)!

FAQ Explained: Cloud Computing Versus Server

What are some common cloud providers?

Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are the major players, but many smaller, specialized providers exist.

How do I choose the right cloud deployment model?

It depends on your security needs and control preferences. Public clouds are cost-effective and scalable, while private clouds offer more control but require more investment. Hybrid clouds blend the two.

What about data backup and recovery in the cloud?

Cloud providers offer various backup and recovery solutions, but it’s crucial to understand your responsibilities and choose a plan that meets your Recovery Time Objective (RTO) and Recovery Point Objective (RPO).
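As a rough illustration of how RTO and RPO constrain a backup plan, the sketch below checks a plan against both targets. The `BackupPlan` fields and the example numbers are assumptions for this example; the worst-case data loss is taken to be one full backup interval, since a failure can happen just before the next backup runs.

```python
from dataclasses import dataclass


@dataclass
class BackupPlan:
    backup_interval_hours: float   # time between successive backups
    restore_duration_hours: float  # measured or estimated time to restore service


def meets_objectives(plan, rpo_hours, rto_hours):
    """True if worst-case data loss and restore time both fit the targets."""
    worst_case_data_loss_hours = plan.backup_interval_hours
    return (
        worst_case_data_loss_hours <= rpo_hours
        and plan.restore_duration_hours <= rto_hours
    )


# Hypothetical plans checked against a 4-hour RPO and a 4-hour RTO.
nightly = BackupPlan(backup_interval_hours=24, restore_duration_hours=2)
hourly = BackupPlan(backup_interval_hours=1, restore_duration_hours=2)

print("nightly backups OK:", meets_objectives(nightly, rpo_hours=4, rto_hours=4))
print("hourly backups OK: ", meets_objectives(hourly, rpo_hours=4, rto_hours=4))
```

The nightly plan restores quickly enough but could lose up to a day of data, so it fails the RPO even though it passes the RTO.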

Is cloud computing more secure than on-site servers?

It’s not a simple yes or no. Both have vulnerabilities. Cloud providers invest heavily in security, but you still need to implement strong security practices. On-site servers require diligent management of physical and network security.