Cloud computing uses server virtualization, and that’s a pretty big idea to unpack, right? Think of it like this: your favorite apps and online services all run on servers, powerful computers that store and process data. Server virtualization basically slices one powerful physical server into many smaller virtual servers, each of which behaves like an independent machine. This lets cloud providers pack more services onto less hardware, which saves money and makes much better use of every machine.

It’s the backbone of modern cloud computing, enabling everything from streaming videos to running complex business applications.

This approach offers a ton of advantages. Imagine the flexibility of easily scaling resources up or down depending on demand, like magically adding more processing power when your online game gets a huge influx of players. That’s the power of virtualization! We’ll dive into the specifics of how this works, exploring different virtualization techniques, resource management, security implications, and the cost benefits this amazing technology provides.

Cloud Computing Services Leveraging Server Virtualization

Server virtualization is the backbone of modern cloud computing, enabling the efficient delivery of various services. By abstracting physical hardware into virtual machines (VMs), cloud providers can maximize resource utilization, improve scalability, and offer flexible service models. This allows for a more dynamic and cost-effective approach to IT infrastructure compared to traditional on-premises solutions. Let’s explore how different cloud service models rely on this crucial technology.

Infrastructure as a Service (IaaS) and Server Virtualization

IaaS providers, like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), heavily depend on server virtualization to offer their core services. They utilize virtualization to create and manage virtual servers, storage, and networking resources that are then provisioned to customers on demand. This allows for significant flexibility and scalability, as resources can be quickly allocated and deallocated based on customer needs.

- Example: A company needing to rapidly scale its web application during a promotional event can easily provision hundreds of virtual servers within minutes using IaaS. These virtual servers are created and managed by the provider using virtualization technology, eliminating the need for the company to manage physical hardware.

- Use Case: A startup developing a new mobile game might use IaaS to host its game servers. The provider’s virtualization technology allows them to easily scale their server capacity up or down as the game’s popularity changes, ensuring optimal performance and cost efficiency.

Platform as a Service (PaaS) and Server Virtualization

PaaS providers, such as Heroku, Google App Engine, and AWS Elastic Beanstalk, leverage server virtualization to provide a complete platform for application development and deployment. The underlying infrastructure, including servers, operating systems, and databases, is virtualized and managed by the provider. Developers focus solely on building and deploying their applications, without needing to worry about the underlying infrastructure.

- Example: A developer building a web application using a PaaS platform can deploy their code to a virtualized environment provided by the service. The PaaS provider handles the underlying server infrastructure, including operating system updates and security patches, all managed through server virtualization.

- Use Case: A company migrating its legacy application to the cloud might utilize a PaaS offering. The PaaS provider’s virtualization capabilities allow for seamless integration and deployment of the application onto a virtualized environment without extensive changes to the application’s codebase.

Software as a Service (SaaS) and Server Virtualization

Even SaaS providers, such as Salesforce, Google Workspace, and Microsoft 365, indirectly rely on server virtualization. While users don’t directly interact with virtual machines, the underlying infrastructure supporting these applications is almost entirely virtualized. This allows SaaS providers to efficiently manage and scale their services to meet the needs of millions of users.

- Example: When a user accesses their Salesforce account, they are interacting with an application running on a virtualized server infrastructure. Salesforce utilizes server virtualization to manage and scale its application servers to handle the massive number of concurrent users.

- Use Case: A large enterprise deploying a company-wide CRM system might choose a SaaS solution like Salesforce. The underlying server virtualization allows Salesforce to efficiently handle the data and user requests from numerous employees across different locations.

Scalability and Elasticity with Server Virtualized Clouds

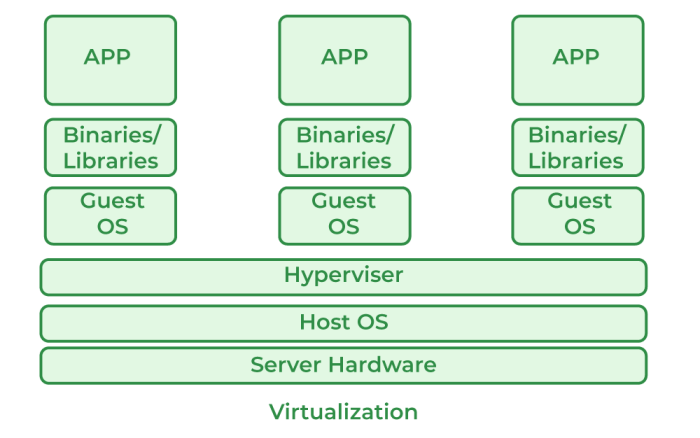

Server virtualization is the backbone of modern cloud computing’s scalability and elasticity. It allows for dynamic resource allocation, enabling cloud providers to efficiently manage and adapt to fluctuating demands. This flexibility translates directly to cost savings and improved performance for users, making it a crucial component of the cloud’s success.

Server virtualization allows for the creation of multiple virtual machines (VMs) on a single physical server. Each VM acts like a standalone computer, with its own operating system and resources. This means that instead of needing a dedicated physical server for each application or workload, multiple applications can run concurrently on the same hardware, maximizing resource utilization. This efficient use of hardware is what fundamentally drives the scalability and elasticity of virtualized cloud environments.
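To make the consolidation idea concrete, here is a minimal Python sketch of packing several VMs onto as few physical hosts as possible. It uses a simple first-fit-decreasing heuristic, which is only one of many possible placement strategies and not necessarily what any given cloud provider does. The host sizes, VM names, and the `place_vms` function are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical server with fixed CPU and RAM capacity (illustrative sizes)."""
    name: str
    cpu_cores: int
    ram_gb: int
    vms: list = field(default_factory=list)  # (vm_name, cpu, ram) tuples

    def fits(self, cpu: int, ram: int) -> bool:
        used_cpu = sum(vm[1] for vm in self.vms)
        used_ram = sum(vm[2] for vm in self.vms)
        return used_cpu + cpu <= self.cpu_cores and used_ram + ram <= self.ram_gb


def place_vms(vm_requests, host_cpu=32, host_ram=128):
    """First-fit-decreasing placement: largest VMs first, each on the first
    host with room; a new host is added only when no existing host fits."""
    hosts = []
    ordered = sorted(vm_requests, key=lambda vm: (vm[1], vm[2]), reverse=True)
    for name, cpu, ram in ordered:
        if cpu > host_cpu or ram > host_ram:
            raise ValueError(f"{name} is larger than a single host")
        target = next((h for h in hosts if h.fits(cpu, ram)), None)
        if target is None:
            target = Host(f"host-{len(hosts) + 1}", host_cpu, host_ram)
            hosts.append(target)
        target.vms.append((name, cpu, ram))
    return hosts


# Hypothetical workload: (name, vCPUs, RAM in GB)
requests = [
    ("web-1", 4, 8),
    ("web-2", 4, 8),
    ("db-1", 16, 64),
    ("cache-1", 8, 32),
    ("batch-1", 8, 16),
]
hosts = place_vms(requests)
for host in hosts:
    print(host.name, [vm[0] for vm in host.vms])
```

With these made-up numbers, five workloads that would otherwise need five dedicated machines fit on two hosts.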

Resource Scaling in Virtualized Clouds

Scaling resources in a virtualized cloud environment is a straightforward process. When demand increases, cloud providers can quickly provision additional capacity in one of two ways: “scaling up” (vertical scaling) adds more CPU cores, RAM, or storage to existing VMs, while “scaling out” (horizontal scaling) creates entirely new VMs to share the load. Conversely, when demand decreases, unused VMs can be shut down or resources can be reclaimed from existing VMs, reducing costs and improving efficiency.

Scaling back down is equally seamless. This dynamic allocation is in stark contrast to traditional, non-virtualized environments, where scaling often requires significant lead time and manual intervention.
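The decision of how many VMs to run can be sketched as a simple proportional rule: size the fleet so that average CPU utilization lands near a target. This is an illustrative sketch only; the function name, the 60% target, and the fleet limits are assumptions, and real autoscalers typically add cooldown periods and smoothing on top.

```python
import math


def desired_vm_count(current_vms, avg_cpu_percent,
                     target_cpu_percent=60.0, min_vms=1, max_vms=20):
    """Return the VM count that would bring average CPU close to the target.

    All thresholds here are hypothetical defaults chosen for illustration.
    """
    if current_vms < 1:
        raise ValueError("current_vms must be at least 1")
    raw = math.ceil(current_vms * avg_cpu_percent / target_cpu_percent)
    # Clamp to the allowed fleet size so a spike cannot scale without bound.
    return max(min_vms, min(max_vms, raw))


print(desired_vm_count(4, 90))   # busy fleet: grows from 4 to 6 VMs
print(desired_vm_count(4, 15))   # idle fleet: shrinks from 4 to 1 VM
```

The same rule handles growth and shrinkage, which is why adding and removing capacity can be treated as one automated process.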

Comparison of Virtualized and Non-Virtualized Cloud Infrastructure Scalability

The scalability of virtualized cloud infrastructure significantly surpasses that of non-virtualized environments. In a non-virtualized setup, scaling requires purchasing and configuring new physical servers, a process that can take days or even weeks. This approach is inflexible, expensive, and prone to over-provisioning (purchasing more resources than are actually needed). In contrast, a virtualized cloud can scale up or down in minutes, responding almost instantly to changing demands.

For example, a company experiencing a sudden surge in website traffic during a promotional campaign can easily add more VMs to handle the increased load without significant delays or capital expenditure. The ability to rapidly adapt to fluctuating demand is a major advantage of virtualization.

Adding and Removing Virtual Machines to Handle Fluctuating Workloads

Adding a VM to a virtualized cloud typically involves a simple process initiated through a management console or API. The cloud provider’s infrastructure automatically allocates the necessary resources (CPU, RAM, storage, and network bandwidth) to the new VM. The VM is then configured with the desired operating system and applications. Removing a VM is equally simple. The VM is shut down, and its resources are released back to the pool of available resources, ready for allocation to other VMs.

This process is often automated, allowing for efficient management of resources and cost optimization. Imagine an e-commerce website expecting a spike in traffic around a holiday season. They can pre-configure additional VMs and automatically activate them as traffic increases, ensuring optimal performance and preventing service disruptions. Once the peak demand subsides, these extra VMs can be shut down, eliminating unnecessary costs.
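The allocate-and-release cycle described above can be sketched with a small in-memory model. The `ResourcePool` class below is hypothetical and does not correspond to any provider’s API; it only shows how creating a VM draws from a shared pool and removing it returns the resources.

```python
class ResourcePool:
    """Toy model of a cluster's shared CPU and RAM (not a real cloud API)."""

    def __init__(self, cpu_cores, ram_gb):
        self.free_cpu = cpu_cores
        self.free_ram = ram_gb
        self.vms = {}  # vm name -> (cpu, ram)

    def create_vm(self, name, cpu, ram):
        if name in self.vms:
            raise ValueError(f"VM {name!r} already exists")
        if cpu > self.free_cpu or ram > self.free_ram:
            raise RuntimeError("insufficient capacity in the pool")
        self.free_cpu -= cpu
        self.free_ram -= ram
        self.vms[name] = (cpu, ram)

    def destroy_vm(self, name):
        # Shutting a VM down hands its resources back to the pool.
        cpu, ram = self.vms.pop(name)
        self.free_cpu += cpu
        self.free_ram += ram


pool = ResourcePool(cpu_cores=16, ram_gb=64)
pool.create_vm("web-1", cpu=4, ram=8)
pool.create_vm("web-2", cpu=4, ram=8)   # extra VM for the holiday peak
pool.destroy_vm("web-2")                # peak is over, release it
print(pool.free_cpu, pool.free_ram)     # 12 56
```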

High Availability and Disaster Recovery in Virtualized Clouds

Server virtualization plays a crucial role in building robust and resilient cloud environments. By abstracting the underlying hardware, virtualization allows for easier management, faster recovery times, and significantly improved high availability and disaster recovery capabilities compared to traditional physical server setups. This enhanced resilience translates to reduced downtime, minimized data loss, and improved business continuity.

Virtualized environments offer several advantages for high availability and disaster recovery. The ability to quickly create and deploy virtual machines (VMs) allows for rapid failover to redundant systems in case of hardware failure or other unforeseen events. Furthermore, the ease of replication and backup of virtual machines simplifies the implementation of disaster recovery strategies. This flexibility and control contribute to a more robust and responsive infrastructure.

Creating Highly Available Virtual Machines and Applications

Achieving high availability for VMs and applications within a virtualized cloud often involves a combination of techniques. Redundancy is key; this can be implemented through the use of multiple virtual machines, each running a copy of the application. These VMs are typically distributed across different physical hosts within the cloud environment to mitigate the risk of a single point of failure.

Load balancing techniques ensure that incoming traffic is distributed evenly across the available VMs, maximizing resource utilization and preventing overload on any single instance. High-availability clusters, often managed by specialized software, automatically detect and respond to failures, seamlessly transferring workloads to healthy VMs to maintain continuous operation. Heartbeat mechanisms and failover scripts are critical components of these systems.

For example, a web application might use a load balancer to distribute traffic across three VMs; if one VM fails, the load balancer automatically redirects traffic to the remaining two, ensuring continued service.
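The three-VM example can be sketched as a round-robin balancer that skips unhealthy backends. This is a simplified illustration: the class and VM names are made up, and health is set by hand here, whereas a real load balancer would probe its backends.

```python
class RoundRobinBalancer:
    """Distributes requests across backends, skipping any marked as down."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def route(self):
        # Try each backend at most once per request before giving up.
        for _ in range(len(self.backends)):
            candidate = self.backends[self._next % len(self.backends)]
            self._next += 1
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


lb = RoundRobinBalancer(["vm-a", "vm-b", "vm-c"])
print([lb.route() for _ in range(3)])  # ['vm-a', 'vm-b', 'vm-c']
lb.mark_down("vm-b")                   # simulate a VM failure
print([lb.route() for _ in range(4)])  # ['vm-a', 'vm-c', 'vm-a', 'vm-c']
```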

Implementing Disaster Recovery Strategies Using Virtualized Infrastructure

Disaster recovery in virtualized clouds relies heavily on the ability to quickly replicate and restore virtual machines. Common strategies include replication to a geographically separate data center, utilizing cloud-based backup and recovery services, or employing a combination of both. Replication involves creating exact copies of VMs and their associated data in a secondary location. This secondary location can be another data center within the same region or a completely different geographic area, depending on the level of resilience required.

Regular backups are crucial, allowing for restoration of VMs from a known good state in case of catastrophic data loss. These backups can be stored locally, in a secondary data center, or using cloud-based storage solutions. The choice of strategy depends on factors such as recovery time objectives (RTOs) and recovery point objectives (RPOs), which define acceptable downtime and data loss levels.

For instance, a financial institution with stringent RTOs and RPOs might opt for near-real-time replication to a geographically diverse data center.
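One way to reason about this choice is to compare each strategy’s worst-case data loss and recovery time against the required RPO and RTO. The sketch below does exactly that; the strategy names and every figure in it are illustrative placeholders, not measurements or vendor guarantees.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class Strategy:
    name: str
    worst_case_data_loss: timedelta    # what the strategy can achieve as RPO
    expected_recovery_time: timedelta  # what the strategy can achieve as RTO


# Placeholder figures for illustration only.
STRATEGIES = [
    Strategy("nightly backup", timedelta(hours=24), timedelta(hours=8)),
    Strategy("hourly snapshots", timedelta(hours=1), timedelta(hours=2)),
    Strategy("asynchronous replication", timedelta(minutes=5), timedelta(minutes=15)),
    Strategy("synchronous replication", timedelta(0), timedelta(minutes=5)),
]


def candidates(strategies, rpo, rto):
    """Return the names of strategies that satisfy both objectives."""
    return [
        s.name
        for s in strategies
        if s.worst_case_data_loss <= rpo and s.expected_recovery_time <= rto
    ]


# A strict requirement leaves only the most aggressive option.
print(candidates(STRATEGIES, rpo=timedelta(minutes=1), rto=timedelta(minutes=10)))
```

Relaxing either objective widens the list, which is usually where the cost trade-off gets decided.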

Implementing a Failover Mechanism in a Virtualized Cloud Environment

Implementing a failover mechanism in a virtualized cloud involves a structured approach.

- Identify Critical Applications and VMs: Begin by pinpointing the applications and VMs crucial for business continuity. These are the ones that need the highest level of protection.

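- Choose a Replication Strategy: Decide on a suitable replication method (e.g., synchronous or asynchronous replication) based on your RTO and RPO requirements. Synchronous replication confirms each write at both sites before acknowledging it, which ensures near-zero data loss but adds write latency. Asynchronous replication acknowledges writes immediately and copies them afterward, which protects performance but lets the secondary site lag slightly behind, so the most recent changes may be lost in a failover.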

- Configure Replication: Set up the chosen replication technology, ensuring the accurate and timely replication of data to the secondary site.

- Test the Failover Process: Conduct regular failover drills to validate the functionality and efficiency of the disaster recovery plan. This helps identify potential weaknesses and allows for necessary adjustments.

- Monitor the System: Implement monitoring tools to track the health and performance of both primary and secondary sites. This proactive approach allows for early detection of potential issues.

- Document the Process: Maintain comprehensive documentation outlining the entire failover procedure, including contact information, recovery steps, and any specific instructions.
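The monitoring and failover steps above can be sketched as a heartbeat check: if the active site stops reporting and the standby is still alive, the roles swap. The `FailoverMonitor` class, the site names, and the 15-second timeout are assumptions made for this example; timestamps are passed in explicitly so the behavior is easy to test in a failover drill.

```python
class FailoverMonitor:
    """Promotes the standby when the active node misses its heartbeat window."""

    def __init__(self, primary, secondary, timeout_s=15.0):
        self.active = primary
        self.standby = secondary
        self.timeout_s = timeout_s
        self.last_heartbeat = {}  # node -> timestamp of last heartbeat

    def heartbeat(self, node, now):
        self.last_heartbeat[node] = now

    def _alive(self, node, now):
        last = self.last_heartbeat.get(node)
        return last is not None and now - last <= self.timeout_s

    def check(self, now):
        # Fail over only if the standby is itself healthy; otherwise stay put.
        if not self._alive(self.active, now) and self._alive(self.standby, now):
            self.active, self.standby = self.standby, self.active
        return self.active


monitor = FailoverMonitor("site-a", "site-b", timeout_s=15.0)
monitor.heartbeat("site-a", now=0.0)
monitor.heartbeat("site-b", now=0.0)
print(monitor.check(now=10.0))        # site-a (still within the timeout)
monitor.heartbeat("site-b", now=20.0)
print(monitor.check(now=20.0))        # site-b (site-a went silent)
```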

So, there you have it: server virtualization isn’t just some tech jargon; it’s the engine driving the cloud revolution. By efficiently managing resources, isolating workloads from one another, and allowing for seamless scalability, it’s the key to the cost-effective and powerful cloud services we rely on daily. From the everyday apps on your phone to the complex infrastructure of large corporations, understanding server virtualization is essential to grasping the inner workings of the modern digital world.

It’s a game-changer, plain and simple.

Question & Answer Hub

What’s the difference between Type 1 and Type 2 hypervisors?

Type 1 hypervisors (like VMware ESXi or Xen) run directly on the server’s hardware, while Type 2 hypervisors (like VirtualBox or VMware Workstation) run on top of an existing operating system.

How does server virtualization improve security?

Virtualization allows for better isolation of VMs, reducing the impact of a security breach on the entire system. It also enables features like snapshots and rollback, facilitating easier recovery from attacks.

Is server virtualization more expensive than physical servers?

Initially, there might be some setup costs, but in the long term server virtualization generally leads to a lower total cost of ownership (TCO) due to reduced hardware needs, energy consumption, and administrative overhead.

What are some common challenges of server virtualization?

Resource contention (multiple VMs competing for resources), managing complex configurations, and ensuring consistent security across all VMs are common challenges.
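One rough way to spot resource contention risk is the CPU overcommit ratio: total virtual CPUs assigned to VMs divided by the physical cores on the host. The sketch below computes it; the 4.0 threshold is an arbitrary placeholder, since an acceptable ratio depends heavily on the workload.

```python
def cpu_overcommit_ratio(vcpus_per_vm, physical_cores):
    """Total vCPUs allocated across VMs divided by physical cores on the host."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vcpus_per_vm) / physical_cores


def contention_risk(vcpus_per_vm, physical_cores, max_ratio=4.0):
    """Flag a host whose overcommit ratio exceeds a chosen (hypothetical) limit."""
    return cpu_overcommit_ratio(vcpus_per_vm, physical_cores) > max_ratio


print(cpu_overcommit_ratio([4, 4, 8, 16], physical_cores=16))  # 2.0
print(contention_risk([8] * 10, physical_cores=16))            # True
```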